It’s Here! VIVERSE’s New AI Tools Built to Boost Your Creative Workflow, Not Replace It

TL;DR

- Object AI: Turn text or reference images into 3D objects, export standard files, and use them anywhere. Try it now at VIVERSE Object AI.

- Scene AI: Upload a video and our tools reconstruct a photoreal 3D scene using Gaussian splatting. Request access to pilot Scene AI for your team.

- Open web: View, share, and embed 3D content in your browser with a single link, no app required, powered by VIVERSE Studio’s 3D publishing and game engine support.

- In light of these new features, we’ve updated our Terms of Use to reflect the following clarifications:

- VIVERSE does not use any user data (such as generative inputs, outputs, uploaded content, or similar) to train the AI tools we offer, and neither does the third-party service we contract with for Object AI. Your content is your own.

- Read our blog post covering the Terms of Use update and what it means for creators.

- Review the Terms of Use in full.

Meet VIVERSE’s AI Solution

What if 3D content felt as quick as a thought, as shareable as a link? That’s the promise of VIVERSE AI, and its two new features: Object AI and Scene AI.

With two browser-based creation paths, VIVERSE helps teams move from idea to evaluation without installs or long wait times. You keep portability through standard formats, you publish with a link, and you decide how far to take a concept, from quick prototypes to production-ready assets.

The best part? VIVERSE doesn’t use your creations as data to train our partnered LLM: not the worlds you build, not the assets you generate, and not your input content (text, images, or video). Your prompts, your generated object, your content. Period.

Up next, a closer look at what each tool actually does.

What’s new

VIVERSE AI has two ways to move ideas into 3D. One helps you make things fast. The other helps you bring the real world into your workflow for review and planning.

Nothing beats seeing it for yourself, though, so here are simple ways to evaluate both tools.

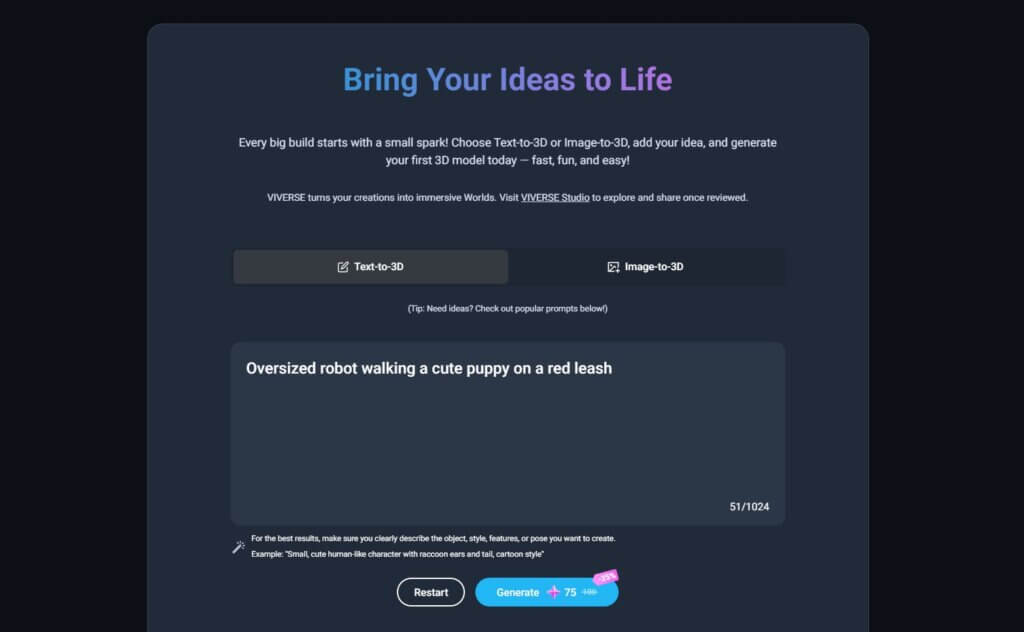

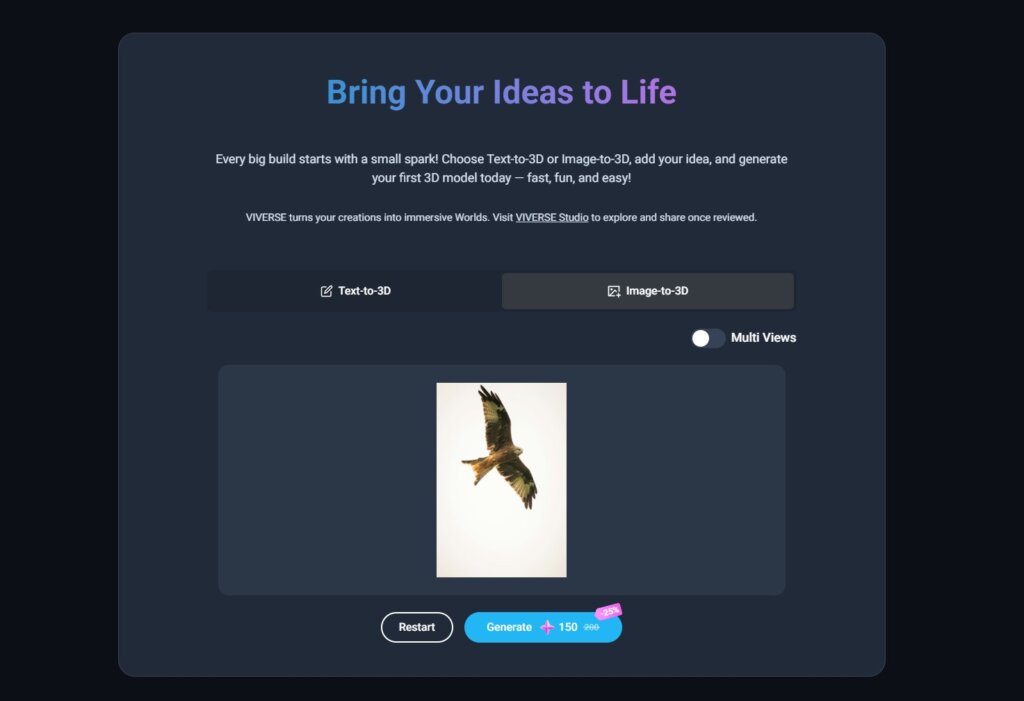

Object AI

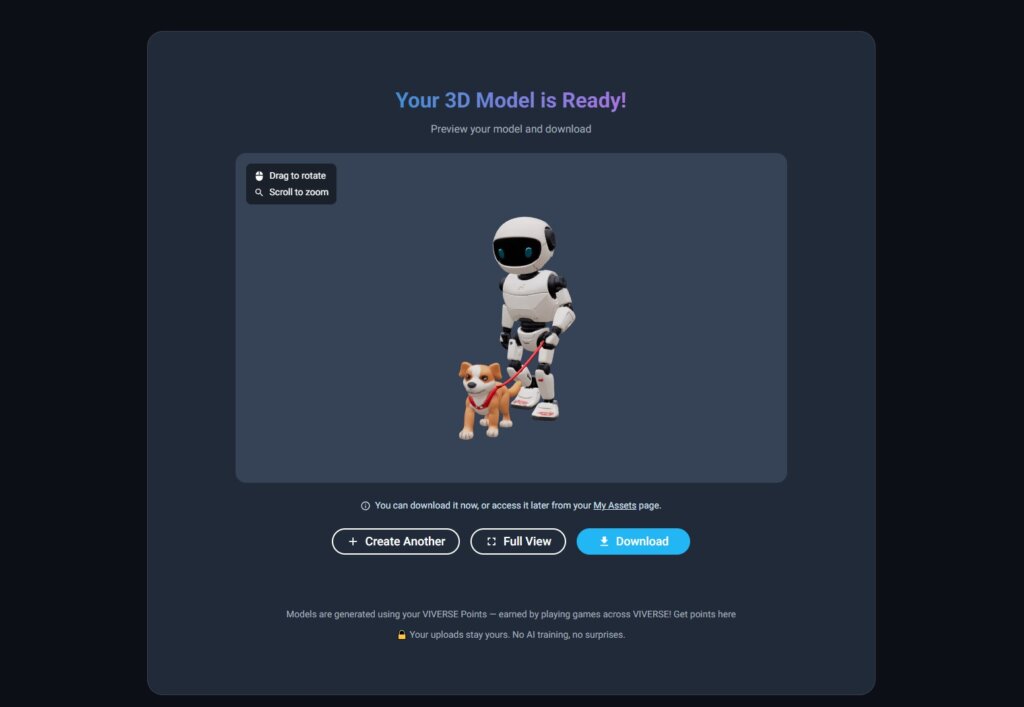

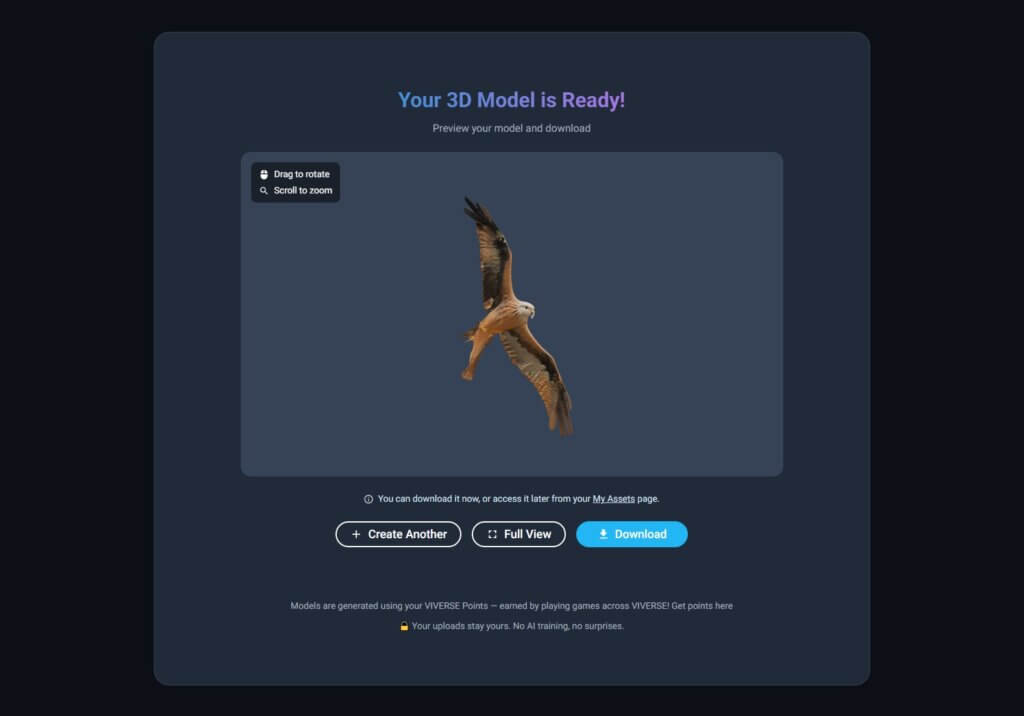

When you need a 3D object quickly, start with a written prompt or a few reference images. Object AI generates a portable model you can export, manage, and drop into your world. It’s fast for prototyping and practical for production because the outputs are standard 3D files you already know how to work with.

- Inputs: text prompts, or one-to-several reference images

- Output: a portable model in a standard 3D format, delivered as a GLB file

- Access: generate using VIVERSE Points

- Privacy and ownership: you own your input and the generated object/model
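Because Object AI exports standard GLB files, you can sanity-check a download using only the public glTF 2.0 binary layout (a 12-byte header followed by a JSON chunk) before handing it to another tool. The sketch below assumes nothing beyond that layout; it is not a VIVERSE tool or API, and the helper name `inspect_glb` is hypothetical.

```python
import json
import struct

# Constants from the public glTF 2.0 binary (GLB) layout, read as little-endian uint32s.
GLB_MAGIC = 0x46546C67   # the ASCII bytes "glTF"
CHUNK_JSON = 0x4E4F534A  # the ASCII bytes "JSON"


def inspect_glb(data: bytes) -> dict:
    """Check a GLB file's 12-byte header and return its parsed JSON chunk.

    Hypothetical helper for illustration; not part of any VIVERSE API.
    """
    if len(data) < 20:
        raise ValueError("too short to be a GLB file")
    # Header: magic, container version, total length in bytes.
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("missing 'glTF' magic bytes")
    if version != 2:
        raise ValueError(f"unsupported GLB version: {version}")
    if length != len(data):
        raise ValueError("header length does not match the data size")
    # The first chunk must be the JSON scene description.
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk is not JSON")
    return json.loads(data[20:20 + chunk_length])
```

For example, `inspect_glb(open("my_object.glb", "rb").read())["asset"]` should return the file’s required glTF asset metadata; `my_object.glb` is a placeholder name. A ValueError here suggests the download is incomplete or not a GLB file.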

Try now! Generate with Object AI

Examples of Object AI’s ability to turn your text prompt into a 3D model

Example of Object AI’s ability to turn your image prompt into a 3D model

Use your results as-is, or download them and refine them further in Blender, for example by retopologizing the mesh to optimize it.

Scene AI

Some stories are better told in context. Scene AI transforms a video into a photoreal reconstruction you can view in the browser. It’s a fast way to align teams, preview spaces, and gather feedback without a site visit.

- Input: a slow, steady video loop of your space, recorded on a phone camera

- Output: a Gaussian splat digital twin, delivered as a PLY file that can be quickly imported into and previewed in a VIVERSE world.

- Access: available by request for pilots and enterprise delivery; consumer access is coming soon.
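Since Scene AI delivers a PLY file, a quick way to see what you received is to read the file’s ASCII header, which lists each element (such as `vertex`) with its count and properties. The sketch below parses that standard PLY header. The attribute names it checks for (`opacity`, `scale_0`, `rot_0`, `f_dc_0`) follow the convention of the reference 3D Gaussian Splatting implementation; whether Scene AI output uses the same names is an assumption, and the function names are hypothetical.

```python
def read_ply_header(data: bytes) -> dict:
    """Parse the ASCII header of a PLY file into its format and elements."""
    end = data.find(b"end_header")
    if not data.startswith(b"ply") or end == -1:
        raise ValueError("not a PLY file")
    lines = data[:end].decode("ascii").splitlines()
    info = {"format": None, "elements": {}}
    current = None
    for line in lines[1:]:  # skip the leading "ply" line
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "format":
            info["format"] = parts[1]
        elif parts[0] == "element":
            current = parts[1]
            info["elements"][current] = {"count": int(parts[2]), "properties": []}
        elif parts[0] == "property" and current is not None:
            # The property name is the last token, e.g. "property float opacity".
            info["elements"][current]["properties"].append(parts[-1])
    return info


# Assumption: splat attribute names as written by the reference 3DGS exporter.
SPLAT_ATTRIBUTES = {"x", "y", "z", "opacity", "scale_0", "rot_0", "f_dc_0"}


def summarize_splat(data: bytes) -> str:
    """Report the point count and whether the header looks like a Gaussian splat."""
    info = read_ply_header(data)
    vertex = info["elements"].get("vertex")
    if vertex is None:
        raise ValueError("no vertex element found")
    missing = SPLAT_ATTRIBUTES - set(vertex["properties"])
    kind = "Gaussian splat" if not missing else "generic point cloud"
    return f"{vertex['count']} points, {info['format']}, looks like a {kind}"
```

Only the header is decoded, so this works on large binary files; pass the first few kilobytes of the file if you want to avoid reading the whole thing.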

Next Step: Request access

Let’s check out an enterprise example

Here’s an enterprise-level example of Scene AI in action. It shows how a video can be transformed into a 3D experience, and not just any experience, but one you can virtually walk into and explore.

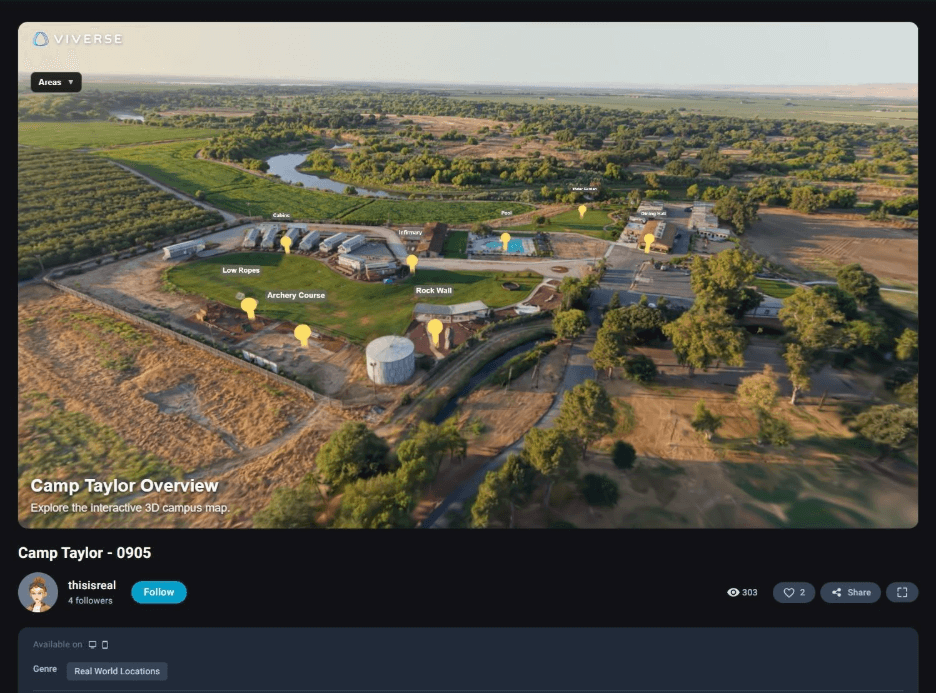

Fellow VIVERSE team members captured aerial drone footage of Camp Taylor Riverfront, which Scene AI then turned into a Gaussian splat. From there, it has been developed into multiple explorable worlds, tied together into one large digital twin of the real location. The project is still active, but we can share a few teasers: the original drone footage, and what it looks like to be poolside at Camp Taylor.

The reconstructed scene was then converted and exported for use with PlayCanvas. Collision areas were added, among other adjustments, before it was published on VIVERSE as a functioning, explorable 3D world.

In our screenshots below, you’ll see markers indicating areas of Camp Taylor that are virtually represented and available to visit on VIVERSE. The markers let viewers choose where to take their first step into a 3D environment that was previously available only as video.

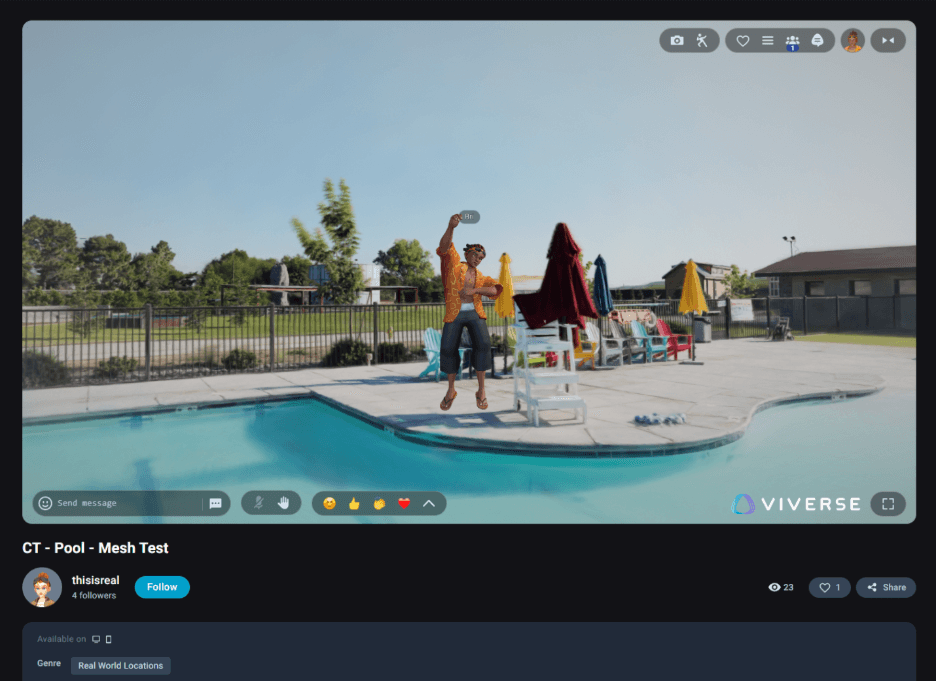

Here’s one of our avatars hanging poolside at Camp Taylor on VIVERSE. The goal is to transform the entire map into a multi-location engagement opportunity for visitors to virtually tour the camp, its amenities, and more.

Stay tuned for a post from the project director, exploring the inspiration and process of converting Camp Taylor Riverfront into its digital twin on VIVERSE.

Curious how these pieces fit together in practice? Let’s walk through each flow.

How it works

Object AI flow. Start with a prompt or upload reference images. Generate your model, then download the GLB or manage it in My Assets. When you’re ready to share or test in a live space, you can publish on the open web using VIVERSE Studio (learn more about VIVERSE Studio and our game engine support).

Scene AI flow. Record a slow loop of your space or object, then submit the video through the Scene AI intake process, available after requesting access. Our system reconstructs the scene as a 3D Gaussian splat and returns a private web preview. From there, you can download for refinement, publish with VIVERSE Studio, or discuss enterprise delivery options with our team.

Why AI, and why now?

Teams across marketing, training, operations, and creative industries need 3D faster than traditional pipelines allow. Recent advances in Gaussian splat technology make high-quality object and scene reconstruction possible. Paired with open-web delivery, that means more people can see, review, and act on 3D content in minutes, not weeks or months.

Want to learn more about Gaussian splatting? Check out our Related Reads section at the end of this post.
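For the curious, here is the core idea as formulated in the original 3D Gaussian Splatting paper (listed in Related Reads). This summarizes the published technique only; it is not a description of Scene AI’s internals, which may differ. Each splat is a 3D Gaussian with center μ and covariance Σ, where Σ is built from a per-splat scale matrix S and rotation R so it stays valid during optimization. To render a pixel, the splats that overlap it are sorted front to back and blended using each splat’s color c_i and opacity α_i:

```latex
G(\mathbf{x}) = \exp\!\left(-\tfrac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{\top}\Sigma^{-1}(\mathbf{x}-\boldsymbol{\mu})\right),
\qquad \Sigma = R\,S\,S^{\top}R^{\top}

C = \sum_{i \in \mathcal{N}} c_i\,\alpha_i \prod_{j=1}^{i-1}\left(1-\alpha_j\right)
```

In the paper, α_i combines a learned opacity with the Gaussian’s projected 2D footprint, and c_i is computed from spherical harmonic coefficients, which is why splat files commonly store per-point opacity, scale, rotation, and color coefficients.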

How VIVERSE compares

- Privacy and ownership: Your prompts, uploaded media, and resulting 3D objects belong to you, not us. For details, review our recently updated Terms of Use, or read our announcement of the update for a quick summary.

- Open web publishing: Share a link, embed on sites, and view on any device with a browser (smartphones, tablets, laptops, PCs, and VR headsets) with no installs needed, all via VIVERSE Studio and our broad game engine support.

- Points on-ramp: Earn starter points through missions, then generate your first object in minutes. Learn more about missions, points, and how to earn them.

- Enterprise delivery: Convert objects and environments recorded via video into 3D Gaussian splats for use in your world(s). Review the processed footage, import the file into your 3D world, then tap publish to launch the finished experience. Build, iterate, and showcase your content, all within the same platform.

Get started

FAQ

Q: Do I own the models I create?

A: Yes! You own the models and scenes you generate. VIVERSE does not own your content. For more information, please review the Terms of Use.

Q: Are uploads used to train AI?

A: VIVERSE does not use your uploads, text-based inputs, or resulting generations to train AI. However, third parties may request separate consent on an opt-in basis.

Q: What formats can I export?

A: Object AI exports models as GLB, a portable standard that works well across engines. For creators using Blender, see the Blender to VIVERSE Create guide. Scene AI outputs a PLY file.

Q: Is Scene AI self-serve?

A: Scene AI is available by request. Start on the Scene AI page to request access.

Related Reads

Curious how video turns into a 3D scene you can preview in your web browser, or why everyone’s talking about Gaussian splatting these days? These quick primers give you just enough background to make sense of the tech.

- The Verge overview: https://www.theverge.com/2025/1/19/24345491/gaussian-splats-3d-scanning-scaniverse-niantic

- CableLabs explainer: https://www.cablelabs.com/blog/gaussian-splatting-immersive-scenes

- 3D Gaussian Splatting research paper: https://arxiv.org/abs/2308.04079

Note: These are optional reads and are not required to use VIVERSE AI or VIVERSE itself.