How UNIST DogRecon Turns One Photo Into a Moving 3D Dog

If you’ve ever had a pet, you’ve probably sighed at least once: “Why are their lives so short?” Because their time with us is fleeting, many pet owners fill their phones with thousands of photos, capturing every precious moment with their furry companions.

Capturing memories you could step into is a compelling idea. Apple’s Spatial Photos explore that with depth-enhanced images. They don’t create animated 3D models, though, and that’s where DogRecon comes in.

What is DogRecon?

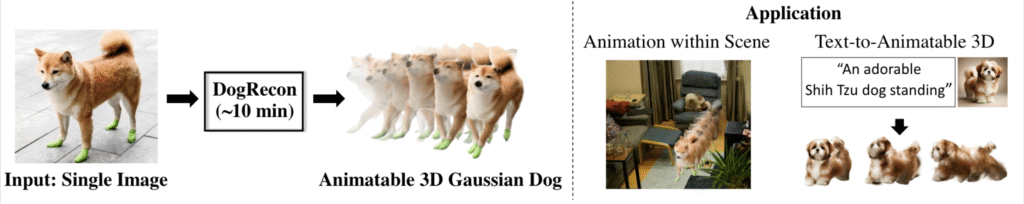

DogRecon is an AI technology from the Ulsan National Institute of Science and Technology (UNIST) in Korea. You don’t need to circle your pet with a camera or collect piles of footage: from a single photo, DogRecon creates a 3D model of your dog that can move. So if you can’t resist snapping photos of every dog you see, DogRecon lets you recreate your furry friends in lifelike, 360-degree 3D!

The name “DogRecon” combines “Dog” and “Reconstruction,” reflecting its focus on reconstructing 3D dog models. It was developed by Professor Kyungsoo Cho’s team at UNIST’s Graduate School of Artificial Intelligence to tackle the tricky problem of 3D dog modeling. UNIST is a leading science and technology research university in Korea.

Why 3D dogs are tricky

Creating a 3D model of a dog isn’t as easy as it sounds. Dogs vary widely by breed, with different sizes, coats, and gaits. In photos, fur often hides the joints of their four legs, so AI models misjudge the underlying body structure and produce results that look like a blurry mess.

How DogRecon works from one photo

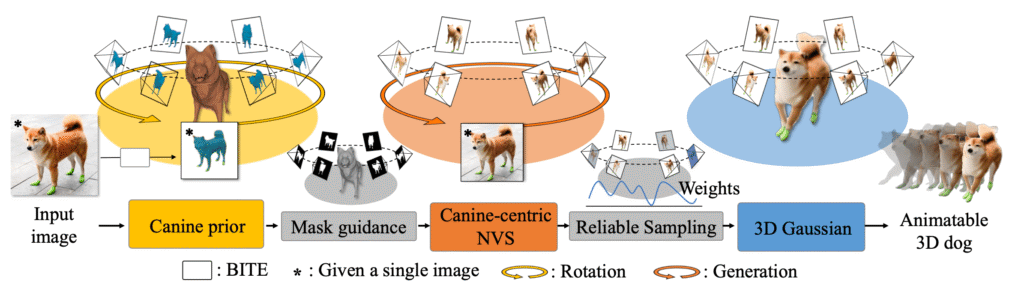

The DogRecon process starts from a single Shiba Inu photo and uses a canine prior knowledge model together with AI image synthesis to build a basic 3D dog structure. It then renders this structure as a lifelike 3D Shiba model using 3D Gaussian Splatting.

To solve these issues, DogRecon combines two core technologies: the Canine Prior Knowledge Model and Canine-centric Novel View Synthesis (NVS).

In simple terms, the Canine Prior Knowledge Model is like an encyclopedia of dogs, trained on a large amount of dog image data. It helps estimate your pet’s breed, body proportions, and skeletal structure, laying the groundwork for 3D modeling. Canine-centric NVS then lets DogRecon generate views of the dog from angles the photo doesn’t show, such as its sides, its tail, and the little paws hidden in its fur. Because these views are generated by AI, there is no need for traditional surround photography or for hundreds of photos or videos.

These simulated multi-angle images are integrated into a 3D point cloud model, which is then rendered using 3D Gaussian Splatting, making the final model look both natural and fluffy.
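To make the final rendering step more concrete, here is a minimal sketch of how 3D Gaussian Splatting colors a single pixel. It is not DogRecon’s code. It assumes the Gaussians have already been projected to screen space, and the `Splat` container, the `shade_pixel` function, and the cutoffs (alpha capped at 0.99, contributions below 1/255 skipped, early stop at near-zero transmittance) are illustrative choices modeled on the original 3D Gaussian Splatting rasterizer. Each splat’s opacity is scaled by its 2D Gaussian falloff at the pixel, and splats are blended front to back as C = Σ cᵢ αᵢ Tᵢ, where Tᵢ = Π over j < i of (1 − αⱼ).

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    """A 3D Gaussian already projected to screen space (hypothetical container)."""
    mean: tuple[float, float]          # 2D center in pixel coordinates
    cov: tuple[float, float, float]    # 2D covariance stored as (sxx, sxy, syy)
    opacity: float                     # learned opacity in [0, 1]
    color: tuple[float, float, float]  # RGB in [0, 1]
    depth: float                       # camera-space depth, used for sorting


def gaussian_weight(splat: Splat, px: float, py: float) -> float:
    """Unnormalized 2D Gaussian falloff of a splat evaluated at pixel (px, py)."""
    sxx, sxy, syy = splat.cov
    det = sxx * syy - sxy * sxy
    # Inverse of the symmetric 2x2 covariance matrix.
    ixx, ixy, iyy = syy / det, -sxy / det, sxx / det
    dx, dy = px - splat.mean[0], py - splat.mean[1]
    power = -0.5 * (ixx * dx * dx + 2.0 * ixy * dx * dy + iyy * dy * dy)
    return math.exp(power)


def shade_pixel(splats, px, py, background=(0.0, 0.0, 0.0)):
    """Front-to-back alpha compositing of splats covering one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for s in sorted(splats, key=lambda s: s.depth):  # nearest first
        alpha = min(0.99, s.opacity * gaussian_weight(s, px, py))
        if alpha < 1.0 / 255.0:
            continue  # negligible contribution at this pixel
        for k in range(3):
            color[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque; farther splats are hidden
    # Whatever light remains shows the background.
    return tuple(c + transmittance * b for c, b in zip(color, background))


# A half-transparent red splat in front of a nearly opaque blue one.
front = Splat(mean=(0.0, 0.0), cov=(1.0, 0.0, 1.0), opacity=0.5, color=(1.0, 0.0, 0.0), depth=1.0)
back = Splat(mean=(0.0, 0.0), cov=(1.0, 0.0, 1.0), opacity=1.0, color=(0.0, 0.0, 1.0), depth=2.0)
print(shade_pixel([back, front], 0.0, 0.0))  # ≈ (0.5, 0.0, 0.495)
```

Sorting by depth and stopping once transmittance is nearly zero is part of what makes splatting fast: splats hidden behind opaque fur never need to be blended.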

DogRecon can create a 3D model from a single Husky photo, whereas other methods require video or multiple images to achieve a comparable result. (Source: Kyungsoo Cho)

What makes DogRecon stand out

The research team compared DogRecon with existing pet modeling methods. BANMo, for instance, requires over 170 video frames and does not support animating its output. Fewshot-GART needs only three images, but its results aren’t as realistic as DogRecon’s.

DogRecon recreates a dog’s appearance accurately and attractively, and it is convenient: a single photo is enough to generate a 3D model of your dog. It even supports “text-generated animation,” letting your dog walk, run, or wag its tail on screen from simple text commands, as if your pet were strolling toward you.
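Whatever command drives the animation, the reconstructed body still has to be posed. The source does not describe DogRecon’s animation internals; one common approach for models built on a skeletal prior is linear blend skinning (LBS), where each point follows a weighted average of its bones’ rigid transforms. The sketch below is a minimal, hypothetical illustration: the functions `rot_z`, `apply`, `bone_about_pivot`, and `skin_point`, the two-bone “body + tail” rig, and the weights are all assumptions chosen for the example.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]

IDENTITY: Mat3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def rot_z(theta: float) -> Mat3:
    """Rotation matrix for an angle theta (radians) about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(rot: Mat3, trans: Vec3, p: Vec3) -> Vec3:
    """Rigid transform of point p: rot @ p + trans."""
    return tuple(sum(rot[i][k] * p[k] for k in range(3)) + trans[i] for i in range(3))


def bone_about_pivot(rot: Mat3, pivot: Vec3) -> tuple[Mat3, Vec3]:
    """Bone transform that rotates around a joint: p' = R (p - pivot) + pivot."""
    r_pivot = apply(rot, (0.0, 0.0, 0.0), pivot)
    return rot, tuple(pivot[i] - r_pivot[i] for i in range(3))


def skin_point(p: Vec3, bones, weights) -> Vec3:
    """Linear blend skinning: weighted average of each bone's transform of p.

    Weights are assumed to be non-negative and to sum to 1.
    """
    out = [0.0, 0.0, 0.0]
    for (rot, trans), w in zip(bones, weights):
        q = apply(rot, trans, p)
        for i in range(3):
            out[i] += w * q[i]
    return tuple(out)


# Hypothetical two-bone rig: a static body and a tail hinged at x = 0.5.
body = (IDENTITY, (0.0, 0.0, 0.0))
tail = bone_about_pivot(rot_z(math.pi / 2), (0.5, 0.0, 0.0))  # tail swung 90 degrees
tip = (1.5, 0.0, 0.0)
print(skin_point(tip, [body, tail], [0.0, 1.0]))  # ≈ (0.5, 1.0, 0.0)
print(skin_point(tip, [body, tail], [0.5, 0.5]))  # ≈ (1.0, 0.5, 0.0)
```

A point fully bound to the tail swings with it, while a point shared between body and tail lands halfway, which is what gives bends a smooth look. LBS is simple and fast, but it is known to lose volume at sharply bent joints; that is a general property of the technique, not a claim about DogRecon.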

Where this could go next

Professor Kyungsoo Cho from UNIST stated, “With over a quarter of households owning pets in Korea, we aim to extend human-centric 3D reconstruction technology to pets. DogRecon will become a tool accessible to everyone, allowing pet owners to recreate their beloved dogs in digital spaces and even animate them.”

The potential applications of this technology are exciting. Once creating 3D models of pets becomes easy, new scenarios will emerge, from remembering beloved pets that are no longer with us to creating interactive virtual pet characters we can engage with on another level. You can bring them into VR or AR, or publish them in VIVERSE virtual spaces as adorable companions for your virtual avatar, continuing your bond online.

To stream dense assets on the open web when you’re ready, try Polygon Streaming for free. Creators and research teams interested in publishing results, demos, or interactive experiences can also apply to the VIVERSE Creator Program.

Availability and limitations

DogRecon is not yet available for public testing. However, if a trial version or open-source release becomes available, we’ll update you right away!

Learn more

- DogRecon project page

- Prof. Kyungsoo Cho’s YouTube channel

- Asiae news article on DogRecon

- Journal article: DogRecon: Canine Prior-Guided Animatable 3D Gaussian Dog Reconstruction From A Single Image

FAQ

- What is DogRecon?

DogRecon is an AI tool developed by UNIST that generates a 3D moving dog model from a single photo.

- How does DogRecon work?

It combines a canine prior knowledge model and novel view synthesis, then renders results with 3D Gaussian Splatting.

- Can anyone use DogRecon now?

Not yet. The research team hasn’t released a public demo.

- What makes DogRecon different from other methods?

DogRecon works with a single photo, while other models require multiple images or video frames.